This is the year of artificial intelligence. In 2023 we entered the « momentum » in which the various forms of AI became popular and mainstream: no longer a subject only for specialists, geeks and tech enthusiasts, but a theme for editorials and essays on society, with a concrete impact on our lives.

Institutions are keeping pace. As MIT Technology Review notes, the AI regulatory landscape is set to move from vague, general ethical guidelines to concrete regulation. Lawmakers in the European Union are finalizing a series of rules, and US government agencies such as the Federal Trade Commission are also drafting new legal frameworks.

Lawmakers in Europe are working on rules for AI models that generate images and text, such as Stable Diffusion, LaMDA and ChatGPT. These rules could signal the end of an era in which companies released their AI models with total freedom and with few or no safeguards or liability.

These models increasingly form the backbone of many AI applications, yet the companies that build them remain tight-lipped about how the models are built and trained. We know little about how they work, which makes it difficult to understand how they come to generate harmful content or biased results, let alone how to mitigate these problems.

The European Union plans to extend its forthcoming artificial intelligence regulation, the AI Act, with rules forcing companies to shed light on the inner workings of their AI models. The regulation is likely to be approved in the second half of the year; after that, companies that want to sell or use AI products in the EU will have to comply or face fines of up to 6% of their total annual worldwide turnover.

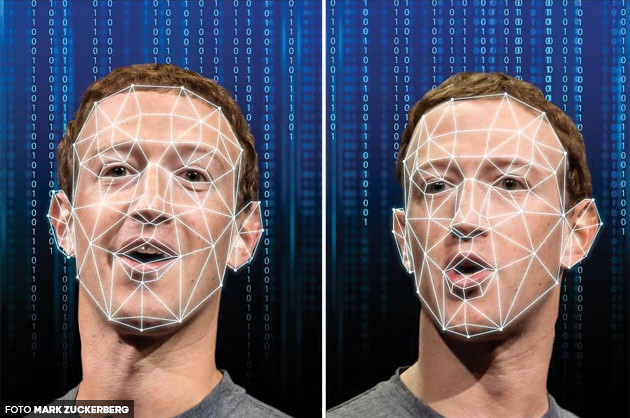

The EU calls these generative models ‘general-purpose AI systems’, because they can be used for many different things (not to be confused with artificial general intelligence). For example, large language models like GPT-3 can power customer service chatbots or be used to create disinformation at scale, and Stable Diffusion can produce images for greeting cards or non-consensual deepfake porn.

“While exactly how these models will be regulated in the AI Act is still being debated, creators of general-purpose AI models, such as OpenAI, Google and DeepMind, will likely need to be more open about how their models are built and trained,” says Dragoș Tudorache, a member of the European Parliament who is part of the team working on the AI Act.

Regulating these technologies is tricky because generative models raise two distinct sets of problems, which call for very different policy solutions, says Alex Engler, an AI governance researcher at the Brookings Institution. One is the spread of harmful AI-generated content, such as hate speech and non-consensual pornography; the other is the prospect of skewed outcomes when companies build these AI models into their hiring processes or use them to review legal documents.

Engler suggests that creators of generative models should be required to set limits on what their models will produce, monitor their outputs and ban users who abuse the technology.

The current push by regulators for greater transparency and corporate accountability could usher in a new era in which AI development is less exploitative and more respectful of rights such as privacy.